Adult platforms face a unique challenge: they must protect users while allowing freedom of expression. Too many platforms fail because their rules are vague, inconsistent, or impossible to enforce. If your platform hosts adult content - whether it’s art, photography, video, or written material - your community guidelines aren’t optional. They’re the foundation of trust, safety, and legal compliance.

Start with Why: What Are You Trying to Protect?

Before you write a single rule, ask yourself: what are you trying to prevent? Is it non-consensual content? Minors? Coercion? Harassment? Spam? Each of these needs a different approach. You can’t stop everything, but you can stop the worst.

Platforms that survive do so because they focus on harm reduction, not censorship. That means rules that target behavior, not ideas. For example, instead of banning "explicit sexual content," you define: "Any content showing non-consensual acts, minors, or non-human participants is prohibited." Clear. Measurable. Enforceable.

Use Concrete Language - No Ambiguity Allowed

Vague rules are useless. "Don’t post inappropriate content" is a trap. Who decides what’s inappropriate? A user? A moderator? A lawyer? That’s chaos.

Good rules use specific examples. Instead of:

- "Avoid offensive language."

Write:

- "Using racial slurs, threats of violence, or sexually explicit language directed at another user is prohibited. This includes direct messages, comments, and public posts."

Why does this work? Because a moderator can look at a message and say: "Yes, that’s a racial slur. Delete it." No guesswork. No bias. No confusion.

Include real-world examples. Show screenshots (blurred if needed) of what’s allowed and what’s not. Users understand visuals better than paragraphs. A single image of a banned comment with a red X says more than 500 words.

Make Enforcement Fair and Transparent

Rules mean nothing if they’re applied unevenly. Users notice when one person gets banned for a comment and another doesn’t. That’s when trust breaks.

Build a three-tier system:

- Automated filters - for known illegal material such as child sexual abuse material (CSAM), which must be blocked before it is published. Hash-matching tools such as PhotoDNA, or similar industry-standard systems, detect previously identified images.

- Human review - for borderline cases: suggestive content, ambiguous consent, or cultural context. Train your moderators with clear rubrics. Don’t let them wing it.

- Appeals process - every user who gets banned must be able to appeal. Not with a form letter. With a real person who listens. Answer within 48 hours at the latest. If you take longer, users will assume the worst.
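The three tiers above can be sketched as a small routing function. This is a minimal illustration under stated assumptions, not a production design: `KNOWN_HASHES`, the `classifier_score` field, and the 0.5 threshold are hypothetical. Services such as PhotoDNA rely on perceptual hashes supplied by vetted providers. Plain SHA-256 is used here only to keep the example self-contained.

```python
import hashlib
from dataclasses import dataclass
from datetime import datetime, timedelta

# Hypothetical blocklist of hashes of known illegal files. Real systems use
# perceptual hashes from a vetted provider, not plain SHA-256.
KNOWN_HASHES = {hashlib.sha256(b"known-bad-file").hexdigest()}

REVIEW_THRESHOLD = 0.5               # assumed score that triggers human review
APPEAL_WINDOW = timedelta(hours=48)  # turnaround target taken from the text


@dataclass
class Upload:
    data: bytes
    classifier_score: float  # 0.0 (benign) to 1.0 (likely violation); assumed input


def route(upload: Upload) -> str:
    """Return 'block', 'human_review', or 'publish' for an upload."""
    # Tier 1: automated filter for exact matches against known material.
    if hashlib.sha256(upload.data).hexdigest() in KNOWN_HASHES:
        return "block"
    # Tier 2: anything the classifier is unsure about goes to a person.
    if upload.classifier_score >= REVIEW_THRESHOLD:
        return "human_review"
    return "publish"


def appeal_due(filed_at: datetime) -> datetime:
    """Tier 3: deadline by which a human should have answered an appeal."""
    return filed_at + APPEAL_WINDOW
```

Note that the automated tier only blocks exact matches. Everything uncertain is sent to a person rather than decided by the machine.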

Post monthly moderation reports. Not just numbers - stories. "Last month, we removed 1,200 posts containing non-consensual content. 85% were caught by automated systems. 15% were reported by users. Our original decision was upheld in 98% of appeals." Transparency builds credibility.
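The percentages in a report like this are easy to derive from raw counts. The sketch below assumes four hypothetical inputs, and the function and field names are illustrative only. The appeal counts in the usage example are invented to show the arithmetic.

```python
def report_summary(removed_by_automation: int, removed_by_user_report: int,
                   appeals_filed: int, appeals_overturned: int) -> dict:
    """Turn raw monthly counts into the headline figures of a report."""
    total = removed_by_automation + removed_by_user_report

    def pct(part: int, whole: int) -> float:
        # Guard against division by zero in a month with no activity.
        return round(100 * part / whole, 1) if whole else 0.0

    return {
        "total_removed": total,
        "pct_automated": pct(removed_by_automation, total),
        "pct_user_reported": pct(removed_by_user_report, total),
        # Share of appealed decisions that stood after human review.
        "pct_decisions_upheld": pct(appeals_filed - appeals_overturned,
                                    appeals_filed),
    }


# Hypothetical month: 1,020 automated removals, 180 from user reports,
# 50 appeals filed, 1 overturned.
summary = report_summary(1020, 180, 50, 1)
```

Publishing the raw counts alongside the percentages lets readers check the figures themselves.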

Know the Legal Boundaries - Don’t Guess

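Adult platforms operate in a legal gray zone. In the U.S., Section 230 shields platforms from most civil liability for user content, but it does not cover federal criminal law, and the 2018 FOSTA amendments removed protection for certain sex-trafficking claims. In the EU, the Digital Services Act lets hosting services keep their liability exemption only if they act expeditiously to remove or disable illegal content once they become aware of it. The DSA sets no general 24-hour deadline, though some national laws and sector-specific rules do impose fixed time limits.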

Ignoring the law is not an option. You don’t need a legal team on retainer - but you do need to know:

- What counts as illegal in your jurisdiction (CSAM, non-consensual intimate imagery, threats)

- How to report illegal content to authorities (NCMEC’s CyberTipline in the U.S.; national law enforcement or an INHOPE member hotline in Europe)

- When to cooperate with law enforcement (never lie, never delete evidence, always log actions)

Platforms that get shut down usually don’t get shut down for being "too permissive." They get shut down because they ignored clear legal warnings. Don’t be that platform.

Design for User Empowerment - Not Just Control

Good guidelines don’t just tell users what not to do. They empower them to control their own experience.

Let users:

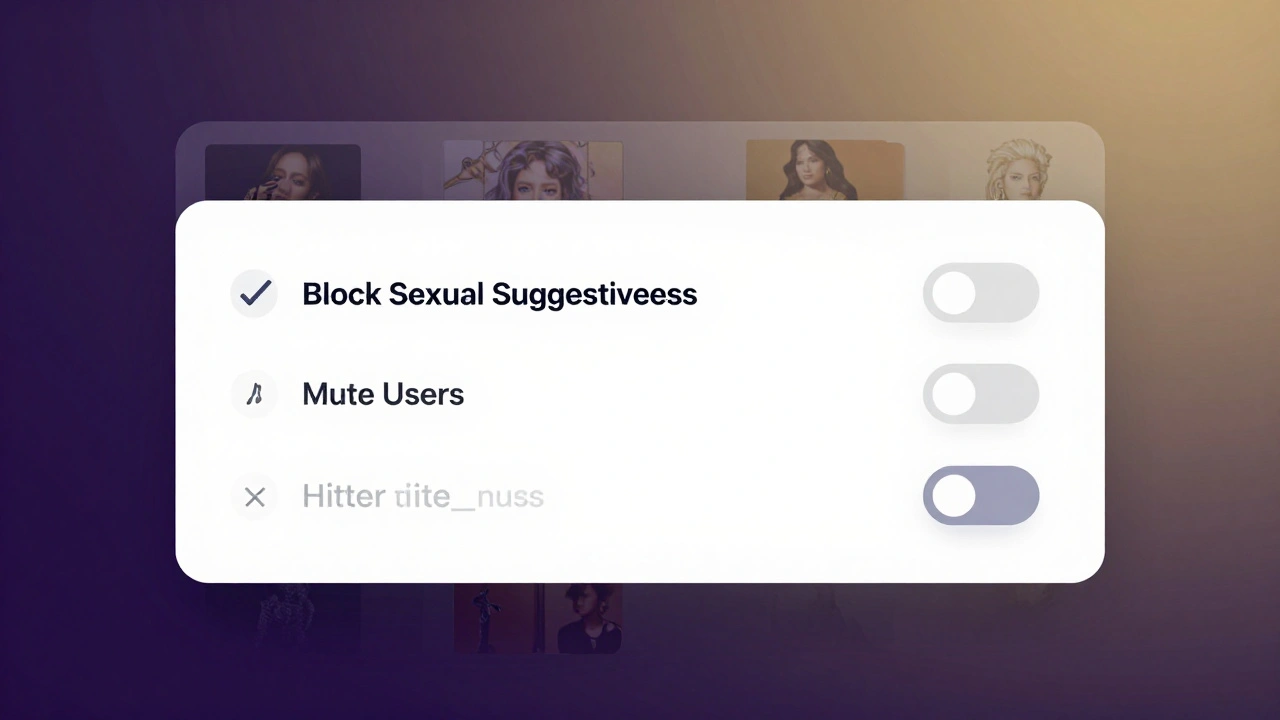

- Customize their content filters - block tags, keywords, or user types

- Report content with one click - and get feedback on what happened

- Choose whether to see sexually suggestive content - toggle it on or off in settings

- Block or mute anyone without needing to explain why

These features reduce the burden on moderators. They also make users feel safe. Safety isn’t just about banning bad actors - it’s about giving users control.
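As a rough sketch, these user controls can be modeled as a per-user preferences object that is checked before a post is shown. The `Post` and `Preferences` classes and their fields are hypothetical. The choice to hide suggestive content by default is also an assumption, not something the guidelines above prescribe.

```python
from dataclasses import dataclass, field


@dataclass
class Post:
    author: str
    tags: set[str]
    text: str
    suggestive: bool = False


@dataclass
class Preferences:
    blocked_tags: set[str] = field(default_factory=set)
    blocked_keywords: set[str] = field(default_factory=set)
    blocked_users: set[str] = field(default_factory=set)
    show_suggestive: bool = False  # assumed default: opt in, not opt out


def is_visible(post: Post, prefs: Preferences) -> bool:
    """Apply one user's filters to one post."""
    if post.author in prefs.blocked_users:
        return False  # block or mute, no explanation required
    if post.suggestive and not prefs.show_suggestive:
        return False
    if post.tags & prefs.blocked_tags:
        return False  # at least one blocked tag is present
    text = post.text.lower()
    # Case-insensitive substring match on blocked keywords.
    return not any(word.lower() in text for word in prefs.blocked_keywords)
```

Because the check runs per viewer, one user hiding a tag never affects what anyone else sees.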

Update Rules Regularly - But Don’t Change Them Arbitrarily

Technology and culture change. Your rules should too. But changing them every month confuses users. Set a rhythm: review guidelines every 90 days.

When you update them:

- Notify users 30 days in advance

- Explain why you’re changing them - "We’ve seen a 40% rise in impersonation scams. Here’s how we’re fixing it."

- Archive old versions - users should be able to see what rules were in place when they posted something
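One way to keep that archive usable is to store each version with its effective date, so you can look up which rules applied when something was posted. The sketch below is illustrative only: the `VERSIONS` list, its dates, and the function names are hypothetical, while the 30-day and 90-day constants come from the text.

```python
from bisect import bisect_right
from datetime import date, timedelta
from typing import Optional

NOTICE_PERIOD = timedelta(days=30)    # advance notice before a change
REVIEW_INTERVAL = timedelta(days=90)  # review rhythm

# Hypothetical archive: (effective date, version label), sorted by date.
VERSIONS = [
    (date(2024, 1, 1), "v1"),
    (date(2024, 4, 1), "v2"),
]


def version_in_force(on: date) -> Optional[str]:
    """Return the guideline version that applied on a given day."""
    dates = [effective for effective, _ in VERSIONS]
    i = bisect_right(dates, on)  # number of versions already in effect
    return VERSIONS[i - 1][1] if i else None


def earliest_effective_date(announced: date) -> date:
    """A change announced today may take effect no sooner than this."""
    return announced + NOTICE_PERIOD


def next_review(last_review: date) -> date:
    return last_review + REVIEW_INTERVAL
```

When a moderator handles an old post, `version_in_force(post_date)` tells them which rules to judge it by.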

Platforms that update rules quietly - without context - lose trust fast. Users don’t mind change. They mind being kept in the dark.

Train Your Moderators Like Professionals

Moderators are your first line of defense. They’re not volunteers. They’re frontline workers dealing with trauma, harassment, and high-stakes decisions.

They need:

- Clear written guidelines - not just verbal instructions

- Regular training sessions - at least once a month

- Mental health support - access to counselors, mandatory breaks, rotation schedules

- Protection from retaliation - users will threaten moderators. You must act

One adult platform in Oregon reduced appeals by 60% after hiring trained moderators and giving them 10 hours of monthly training. They also added a 15-minute mandatory break after every 20 content reviews. Burnout was cut in half.

Don’t Ignore the Edge Cases

Most platforms fail on the edges. What if someone posts a photo of a tattoo in which the subject could be mistaken for a minor? What if two adults consent to something that’s culturally taboo? What if a user is using a pseudonym to avoid detection?

Build a "gray zone" committee - a small group of moderators, legal advisors, and user representatives. Let them review hard cases. Document their decisions. Use those decisions to update your rules.

Example: A user posted a photo of a woman in a corset with a vintage 1940s aesthetic. Someone reported it as "sexualized minors." The committee reviewed it: the woman was clearly an adult, the clothing was historical, the context was artistic. They kept it. Then added a tag: "Historical Fashion - Not Minors." Now others can filter it out if they want.

Measure What Matters

You can’t improve what you don’t measure. Track:

- Number of reports per category (CSAM, harassment, spam, etc.)

- Time to respond to reports

- Appeal success rate

- User satisfaction scores (simple survey: "Did you feel heard?" Yes/No)

- Rate of repeat offenders

Use this data to spot trends. If harassment reports spike after a new feature launches, fix the feature. If CSAM reports drop after adding PhotoDNA, double down on it.
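Several of these metrics can be computed from a simple log of reports. The following is a minimal sketch, assuming a hypothetical `Report` record. The field names, and the definition of a repeat offender as a user with more than one confirmed violation, are assumptions for illustration.

```python
from collections import Counter
from dataclasses import dataclass
from datetime import datetime
from statistics import median
from typing import Optional


@dataclass
class Report:
    category: str
    reported_user: str
    filed_at: datetime
    resolved_at: Optional[datetime]  # None while the report is still open
    violation_confirmed: bool


def summarize(reports: list) -> dict:
    """Compute per-category counts, response time, and repeat-offender rate."""
    by_category = Counter(r.category for r in reports)
    # Response time in hours, for resolved reports only.
    hours = [(r.resolved_at - r.filed_at).total_seconds() / 3600
             for r in reports if r.resolved_at is not None]
    confirmed = Counter(r.reported_user for r in reports
                        if r.violation_confirmed)
    repeat = sum(1 for n in confirmed.values() if n > 1)
    return {
        "reports_by_category": dict(by_category),
        "median_response_hours": median(hours) if hours else None,
        # Share of violating users with more than one confirmed violation.
        "repeat_offender_rate": repeat / len(confirmed) if confirmed else 0.0,
    }
```

The median is used rather than the mean so that a single report left open for weeks does not hide an otherwise fast response time.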

Platforms that track metrics don’t just survive - they evolve. They become the gold standard others try to copy.

Final Rule: Be Human

At the end of the day, your guidelines aren’t about control. They’re about connection. People come to adult platforms to explore, express, and belong. Your job isn’t to police them. It’s to make sure they can do so without fear.

Clear rules. Fair enforcement. Real transparency. That’s all it takes. Not perfect rules. Not perfect moderators. Just consistent, honest, and human ones.

What’s the biggest mistake platforms make when writing community guidelines?

The biggest mistake is using vague language like "don’t be offensive" or "keep it respectful." These phrases mean different things to different people. Instead, use specific examples of prohibited behavior. Show, don’t just tell. Users understand rules better when they can picture them.

Can I ban certain types of content without being accused of censorship?

Yes - if you’re clear about why. Banning non-consensual content, child exploitation, or threats isn’t censorship. It’s harm reduction. The key is to separate moral judgment from safety. Your rules should protect users, not enforce your personal beliefs. Focus on behavior that causes real harm, not on content that makes some people uncomfortable.

How often should I update my community guidelines?

Review them every 90 days. Don’t change them every week - that confuses users. But don’t leave them unchanged for years either. Technology, laws, and social norms shift. Update when you see new patterns: rising reports of a certain type of abuse, new forms of scams, or changes in local laws. Always notify users 30 days before changes take effect.

Do I need a legal team to create these rules?

Not necessarily - but you need to understand the law. You don’t need a lawyer on retainer, but you do need to know what’s illegal in your region. For example, in the U.S., CSAM is always illegal. In the EU, what counts as illegal is defined by EU and member-state law, not by the Digital Services Act itself; the DSA governs how platforms must handle illegal content once they know about it. Non-consensual intimate imagery, for instance, is a criminal offense in many member states. Use free resources like NCMEC’s guidelines or the IWF’s best practices. If you’re unsure, consult a lawyer for a one-time review.

What should I do if a user reports a violation but I can’t verify it?

If you can’t verify, don’t act. But don’t ignore it either. Send a reply: "We reviewed your report and couldn’t confirm a violation based on our guidelines. If you have additional evidence, please share it." This keeps users engaged and shows you’re serious. Never delete a report just because it’s hard to prove. Track all reports - even the ones you can’t act on. Patterns matter.
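To make "patterns matter" concrete, here is a small sketch of a report log that keeps every report and surfaces accounts that several different users have reported, even when no single report could be verified. The `ReportLog` class and the threshold of three distinct reporters are hypothetical choices for illustration.

```python
PATTERN_THRESHOLD = 3  # assumed: distinct reporters needed to trigger a re-review


class ReportLog:
    def __init__(self) -> None:
        # Every report is kept, whether or not it could be verified.
        self.entries = []

    def record(self, reporter: str, target: str, verified: bool) -> None:
        self.entries.append((reporter, target, verified))

    def needs_second_look(self) -> list:
        """Targets with unverified reports from several distinct users."""
        reporters_by_target = {}
        for reporter, target, verified in self.entries:
            if not verified:
                reporters_by_target.setdefault(target, set()).add(reporter)
        return sorted(target for target, reporters in reporters_by_target.items()
                      if len(reporters) >= PATTERN_THRESHOLD)
```

Counting distinct reporters instead of raw reports means one user filing the same complaint repeatedly does not trigger a review on its own.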

How do I train moderators to handle traumatic content?

Start with boundaries: limit their daily review load. Require 15-minute breaks after every 20 content reviews. Offer access to trained counselors. Rotate moderators between high- and low-trauma tasks. Never let one person handle CSAM reports alone. And always, always pay them fairly. Moderation is mental labor. It’s not a volunteer job.

Should I allow appeals for bans?

Yes - and make it fast. Respond within 48 hours at the latest. Users who feel unheard will leave - or fight back. An appeals process isn’t just fair. It’s smart. It catches mistakes. It reduces repeat complaints. And it builds trust. Even if you uphold 95% of bans, the 5% you overturn shows you’re not just automated.

Can I use AI to automatically ban users?

AI can help - but never replace humans. AI is great at spotting known CSAM or spam. But it can’t understand context. The subject of a tattoo photo might be mistaken for a minor. A consensual role-play might be flagged as coercion. Use AI to flag, not decide. Always have a human review before banning. A wrongful automated ban can be devastating for the user and hard to undo.