Every day, thousands of private images and videos are turned into fake content without consent. These aren’t movie effects or joke edits. They’re real people, most often women, forced into explicit scenes they never agreed to. AI tools have made this easier than ever. A 2025 report from the Cyber Civil Rights Initiative found that over 90% of non-consensual deepfakes in adult media target women, and that 80% of cases involve ex-partners or strangers who obtained images through hacking or social engineering. The damage isn’t just emotional; it’s legal, financial, and professional. So how do we stop it? And, more importantly, how do we detect it before it spreads?

What Makes a Deepfake Non-Consensual?

A deepfake becomes non-consensual when someone uses another person’s likeness (face, voice, or body) to create sexually explicit material without their permission. It doesn’t matter whether the original photo was taken in private or in public: once it’s turned into something explicit without consent, it’s a violation. And these aren’t just crudely distorted images. Modern tools like Stable Diffusion, FaceSwap, and DeepFaceLab can generate hyper-realistic images and videos that appear to show real people having sex. Some are so convincing that even experts struggle to spot them without specialized software.

The real danger? These videos often show up on public forums, dating apps, and adult content platforms, and once posted, they spread fast. Algorithms designed to maximize engagement don’t distinguish between real and fake; they push whatever gets clicks. A victim’s face can appear on hundreds of sites within hours, often with no practical way to trace who made the video or to get every copy removed.

How Detection Tools Work Today

Detection isn’t about looking for bad pixels. It’s about finding patterns that generation models can’t fully hide. Tools like Sensity (formerly Deeptrace) and Microsoft’s Video Authenticator analyze subtle inconsistencies that these models leave behind but humans tend to miss. These include:

- Unnatural blinking patterns: AI-generated faces often blink too rarely or with unnatural timing

- Mismatched lighting between face and body

- Asymmetrical facial features that don’t match real-life photos

- Audio sync errors where lip movements don’t match voice timing

- Texture anomalies in skin or hair that AI struggles to replicate accurately
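Blinking is the simplest of these signals to illustrate. The sketch below is a hypothetical, minimal heuristic, not how Sensity, Microsoft, or any other vendor’s detector works. It assumes a separate face-landmark tracker has already produced six (x, y) points per eye for every frame, converts them into the eye aspect ratio (EAR) from Soukupová and Čech’s 2016 blink-detection paper, counts blinks, and flags a clip whose blink rate falls outside an assumed human range. All three thresholds are illustrative guesses.

```python
from math import dist

# Hypothetical thresholds chosen for illustration only; real detectors learn
# their decision rules from labeled data rather than using fixed cut-offs.
EAR_CLOSED = 0.2                      # EAR below this is treated as "eye closed"
MIN_CLOSED_FRAMES = 2                 # a blink must span at least this many frames
NORMAL_BLINKS_PER_MIN = (8.0, 30.0)   # assumed plausible range for a person at rest


def eye_aspect_ratio(eye):
    """Eye aspect ratio from six (x, y) landmarks ordered p1..p6 around the eye.

    p1 and p4 are the eye corners; (p2, p6) and (p3, p5) are upper/lower lid pairs.
    The ratio drops toward zero as the eye closes.
    """
    p1, p2, p3, p4, p5, p6 = eye
    vertical = dist(p2, p6) + dist(p3, p5)
    horizontal = dist(p1, p4)
    return vertical / (2.0 * horizontal)


def count_blinks(ear_series):
    """Count runs of at least MIN_CLOSED_FRAMES consecutive 'closed' frames."""
    blinks, run = 0, 0
    for ear in ear_series:
        if ear < EAR_CLOSED:
            run += 1
            continue
        if run >= MIN_CLOSED_FRAMES:
            blinks += 1
        run = 0
    if run >= MIN_CLOSED_FRAMES:      # the clip ended while the eye was closed
        blinks += 1
    return blinks


def blink_rate_check(ear_series, fps):
    """Return (blinks per minute, True if the rate is outside the assumed range)."""
    minutes = len(ear_series) / fps / 60.0
    rate = count_blinks(ear_series) / minutes
    low, high = NORMAL_BLINKS_PER_MIN
    return rate, not (low <= rate <= high)


if __name__ == "__main__":
    fps = 30
    # One minute of per-frame EAR values with the eyes open the whole time...
    never_blinks = [0.3] * (fps * 60)
    # ...versus one minute with a 3-frame blink every 4 seconds (15 per minute).
    blinks_normally = [0.1 if i % (4 * fps) < 3 else 0.3 for i in range(fps * 60)]
    print(blink_rate_check(never_blinks, fps))     # flagged: zero blinks
    print(blink_rate_check(blinks_normally, fps))  # not flagged
```

Newer generators can produce convincing blinks, so a single cue like this is weak on its own. That is one reason real tools combine many signals, such as the lighting, symmetry, lip-sync, and texture checks listed above, rather than relying on any one of them.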

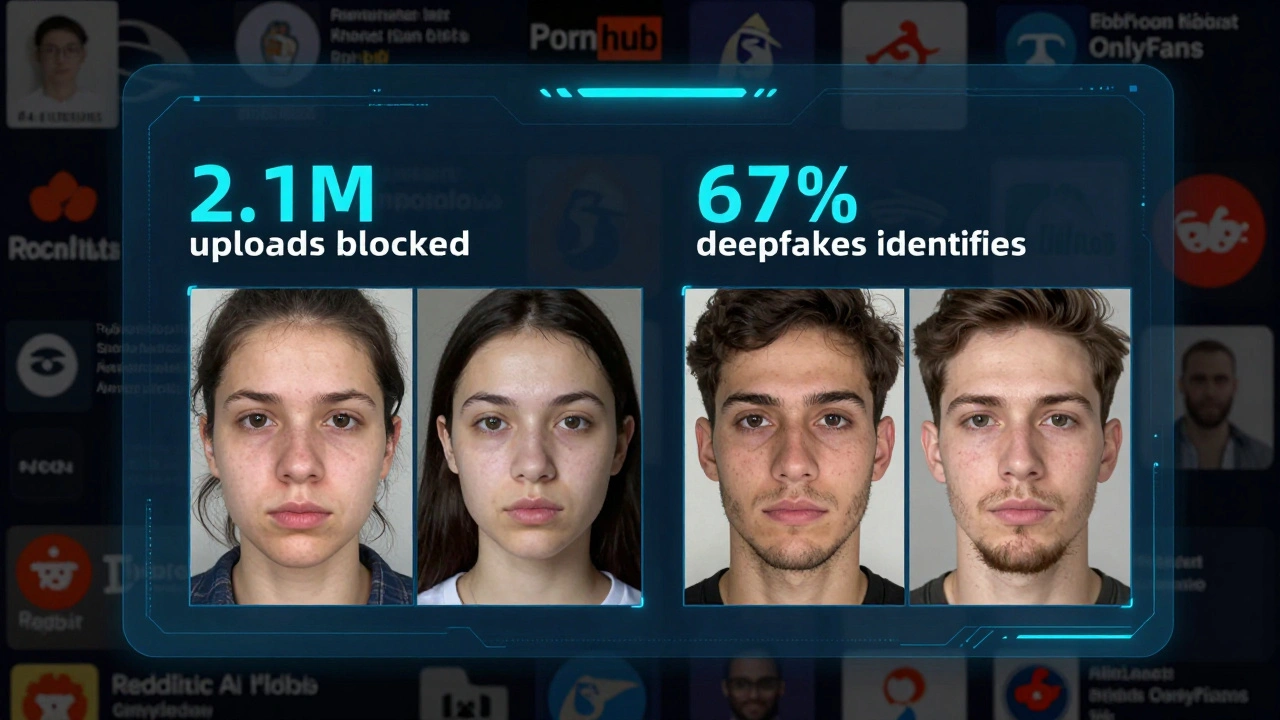

Platforms like Pornhub and OnlyFans now use automated detection systems that scan uploads in real time. In 2024, Pornhub reported blocking over 2.1 million uploads flagged by AI detectors, with 67% of those being non-consensual deepfakes. But detection isn’t perfect. False positives, where genuine content is wrongly flagged, still happen. So do false negatives, where deepfakes slip through. A 2025 Stanford study showed that current tools still miss about 18% of deepfakes made with newer models like Sora and Luma AI.
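Detection quality is usually described with a few standard rates, and the "miss about 18%" figure is one of them: the miss rate, or false negative rate. This short sketch shows how those rates follow from a detector’s raw counts. The batch in the example is hypothetical, with counts chosen only so the miss rate comes out at 18%; they are not figures from the Stanford study or from Pornhub.

```python
def detection_metrics(tp, fp, fn, tn):
    """Standard rates for a binary deepfake detector.

    tp: deepfakes correctly flagged          fn: deepfakes missed (false negatives)
    fp: genuine uploads wrongly flagged      tn: genuine uploads correctly passed
    """
    return {
        # Share of all deepfakes the detector catches (also called sensitivity).
        "recall": tp / (tp + fn),
        # Share of deepfakes that slip through; equal to 1 - recall.
        "miss_rate": fn / (tp + fn),
        # Share of genuine uploads that get wrongly flagged.
        "false_positive_rate": fp / (fp + tn),
        # Share of flagged uploads that really are deepfakes.
        "precision": tp / (tp + fp),
    }


if __name__ == "__main__":
    # Hypothetical batch: 1,000 deepfakes and 9,000 genuine uploads.
    metrics = detection_metrics(tp=820, fn=180, fp=90, tn=8910)
    for name, value in metrics.items():
        print(f"{name}: {value:.3f}")
```

With these made-up counts the detector catches 82% of deepfakes, misses 18%, and wrongly flags only 1% of genuine uploads. Yet because genuine uploads outnumber fakes nine to one in this batch, 90 of its 910 flags (about 10%) land on real content, which is why human review still matters.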

Some tools now use blockchain-based fingerprinting. When a real photo is uploaded to a trusted platform, it gets a digital fingerprint that is stored on a public ledger. If a deepfake built from that image is later uploaded, the system can compare it against the registered fingerprints, identify the source image, and flag the upload. This is still experimental, but early tests carried out under the EU’s Digital Services Act show promise.
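The fingerprint-and-ledger idea can be sketched in a few dozen lines. This is a toy model under stated assumptions, not the design of any deployed or EU-tested system. It assumes each registered photo has already been shrunk to an 8x8 grayscale thumbnail, fingerprints it with a simple perceptual "average hash" (a stand-in for the more robust perceptual hashes production systems use), and records only the fingerprint, never the image, in a hash-chained list that mimics a blockchain’s tamper evidence. The owner IDs and the 10-bit match threshold are hypothetical.

```python
import hashlib
import json


def average_hash(gray_8x8):
    """64-bit perceptual 'average hash' of an 8x8 grayscale thumbnail (values 0-255).

    Each bit is 1 where a pixel is brighter than the thumbnail's mean, so light edits
    such as a brightness shift barely change the hash, unlike a cryptographic hash.
    """
    pixels = [p for row in gray_8x8 for p in row]
    mean = sum(pixels) / len(pixels)
    bits = 0
    for p in pixels:
        bits = (bits << 1) | (1 if p > mean else 0)
    return bits


def hamming(a, b):
    """Number of differing bits between two hashes."""
    return bin(a ^ b).count("1")


def _entry_hash(body):
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode("utf-8")).hexdigest()


class FingerprintLedger:
    """Toy append-only ledger. Each entry stores the SHA-256 of the previous entry,
    so altering any past entry breaks every later link (the core idea of a blockchain).
    """

    GENESIS = "0" * 64

    def __init__(self):
        self.entries = []

    def register(self, owner_id, fingerprint):
        prev = self.entries[-1]["entry_hash"] if self.entries else self.GENESIS
        body = {"owner": owner_id, "fingerprint": fingerprint, "prev": prev}
        self.entries.append({**body, "entry_hash": _entry_hash(body)})

    def verify_chain(self):
        prev = self.GENESIS
        for entry in self.entries:
            body = {k: entry[k] for k in ("owner", "fingerprint", "prev")}
            if entry["prev"] != prev or entry["entry_hash"] != _entry_hash(body):
                return False
            prev = entry["entry_hash"]
        return True

    def find_matches(self, fingerprint, max_distance=10):
        """Owners of registered images within max_distance bits of an upload's hash."""
        return [e["owner"] for e in self.entries
                if hamming(e["fingerprint"], fingerprint) <= max_distance]


if __name__ == "__main__":
    original = [[(r * 8 + c) * 4 for c in range(8)] for r in range(8)]
    brightened = [[p + 3 for p in row] for row in original]   # lightly edited copy
    unrelated = [[252 - p for p in row] for row in original]

    ledger = FingerprintLedger()
    ledger.register("owner-A", average_hash(original))        # hypothetical IDs
    ledger.register("owner-B", average_hash(unrelated))
    print(ledger.find_matches(average_hash(brightened)))      # matches owner-A only
    print(ledger.verify_chain())
```

Note the limits: a whole-image hash like this catches re-uploads and light edits of a registered photo, but a face transplanted into entirely different footage would not match. Catching that case would need face-level matching, which this sketch does not attempt.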

Policies That Actually Work

Legal bans alone don’t work. You can’t arrest every person who uploads a deepfake on a dark web forum. But some policies have made a measurable difference.

In 2023, the UK passed the Online Safety Act, which requires platforms to proactively detect and remove non-consensual intimate imagery. Platforms must now use approved detection tools and report removal rates to Ofcom. Failure to comply means fines up to 10% of global revenue. Since then, reported deepfake cases on UK-based platforms dropped by 41%.

California’s AB 1687, updated in 2025, makes it illegal to create or distribute sexual deepfakes of anyone, whether a public figure or a private individual. It also requires platforms to provide a clear, one-click reporting tool for victims. Within six months of the law taking effect, over 14,000 reports were filed, and 92% of them were confirmed as deepfakes.

Not all policies are good, though. Some countries have tried banning AI tools outright. That doesn’t work. Developers just move offshore. Others require users to upload ID before posting. That creates privacy risks and doesn’t stop someone who already has a victim’s photo.

What Platforms Are Doing Right

Not all platforms are ignoring the problem. A few have taken real action:

- OnlyFans now uses a combination of AI detection and human review teams. They also offer free legal support to victims whose images are misused.

- Reddit banned all subreddits dedicated to non-consensual deepfakes in 2018 and now auto-flags any post containing known victim faces from a shared database.

- Google updated its search algorithm to demote sites hosting deepfake content, even if they claim to be "educational." This reduced traffic to 3,000+ abusive sites by 89% in one year.

- TikTok introduced a "Report Deepfake" button. Once their review team confirms a report, the content is taken down within 2 hours.

These aren’t perfect, but they’re moving in the right direction. The key? They combine technology with human oversight and victim support.

What You Can Do If You’re Affected

If you’re a victim, time matters. The sooner you act, the fewer places the video spreads.

- Take screenshots of every page where the video appears, and save the URLs, timestamps, and usernames.

- Use a trusted reporting tool like Without My Consent or Deepfake Report to submit your case. These services work with platforms and law enforcement.

- File a report with your local cybercrime unit. Many now have specialized teams for deepfake cases.

- Contact a legal aid organization like the Cyber Civil Rights Initiative, which offers free help to victims.

- Don’t delete your own original photos. They’re evidence. Store them securely offline.
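For anyone comfortable running a short script, or with a trusted friend who is, the record-keeping in the first step above can be made more systematic. This is a minimal, hypothetical sketch, not a tool provided by any organization named here. It appends each sighting (URL, username, screenshot file, and the time according to your computer’s clock, in UTC) to a JSON Lines file and stores a SHA-256 hash of each screenshot, so any later change to a saved screenshot can be detected by re-hashing it. The function, file, and field names are assumptions; ask whoever is handling your case what format they need.

```python
import hashlib
import json
from datetime import datetime, timezone


def sha256_of_file(path):
    """SHA-256 of a file, read in chunks so large screenshots or videos are fine."""
    h = hashlib.sha256()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(65536), b""):
            h.update(chunk)
    return h.hexdigest()


def log_sighting(log_path, url, username, screenshot_path, note=""):
    """Append one sighting to a JSON Lines evidence log and return the record."""
    record = {
        "recorded_at_utc": datetime.now(timezone.utc).isoformat(),
        "url": url,
        "username": username,
        "screenshot": screenshot_path,
        "screenshot_sha256": sha256_of_file(screenshot_path),
        "note": note,
    }
    # One JSON object per line keeps the log easy to append to and to read back.
    with open(log_path, "a", encoding="utf-8") as log:
        log.write(json.dumps(record) + "\n")
    return record
```

For example, `log_sighting("evidence.jsonl", "https://example.com/post/123", "uploader_name", "screenshots/post-123.png")` adds one line to `evidence.jsonl`. Keep that file and the screenshots together, backed up offline alongside your original photos.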

There’s no magic button to erase the internet. But you don’t have to fight alone. Support networks exist, and more platforms are building tools to help.

Why This Isn’t Just a Tech Problem

Deepfakes aren’t just about bad code. They’re about power. The people making these videos usually aren’t hackers in basements. They’re often people with access to private images: exes, coworkers, or strangers who paid for leaked content. The real issue? We treat this like a technical glitch instead of a crime against dignity.

Until we recognize these acts as sexual violence, not just "online drama," we’ll keep treating symptoms instead of causes. Detection tools help. Laws help. But real change comes when society refuses to look away.

Can deepfake detection tools block all non-consensual content?

No. Current tools catch about 82% of deepfakes, and content made with newer generative models like Sora and Luma AI is harder to detect. False negatives are still common, especially when a deepfake is built from high-quality source images. Detection works best when combined with human review and user reporting.

Are there free tools to check if my image was used in a deepfake?

Yes. Tools like Deeptrace and Reality Defender offer free scanning for users who upload suspected images. Services like Without My Consent let you submit URLs for analysis, and Google lets you request that pages containing non-consensual fake explicit imagery of you be removed from its search results. These don’t guarantee removal from the sites themselves, but they help identify where your image appears.

Is it illegal to create a sexually explicit deepfake of someone in the U.S.?

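In most cases, yes. For years there was no specific federal ban, but the TAKE IT DOWN Act, signed in May 2025, made it a federal crime to knowingly publish non-consensual intimate imagery, including AI-generated deepfakes, and requires covered platforms to remove reported images within 48 hours. At the state level, 48 states have laws against non-consensual intimate imagery, and California, New York, and Illinois now explicitly include deepfakes under these laws. Depending on the state, creating or distributing a deepfake for sexual purposes can lead to felony charges, fines, and prison time.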

Do social media platforms remove deepfakes automatically?

Some do, but not all. Platforms like TikTok, OnlyFans, and Reddit use AI to auto-detect and remove known deepfakes. Others, especially smaller forums or international sites, rely on user reports. If a platform doesn’t have a clear policy, the video may stay up for weeks or months.

Can I get a deepfake removed from the dark web?

Removing content from the dark web is extremely difficult. Law enforcement agencies sometimes take down servers, but new copies often appear elsewhere. The best approach is to report the deepfake to organizations like the Cyber Civil Rights Initiative, which work with international partners to trace copies and get them removed when possible. Prevention and early detection are more effective than removal after the fact.

There’s no single fix. But progress is happening. Detection tools are getting smarter. Laws are catching up. And more people are speaking out. The goal isn’t to erase AI; it’s to make sure AI doesn’t erase people.